Persistent Memory in LLMs: India’s AI Edge in 2025

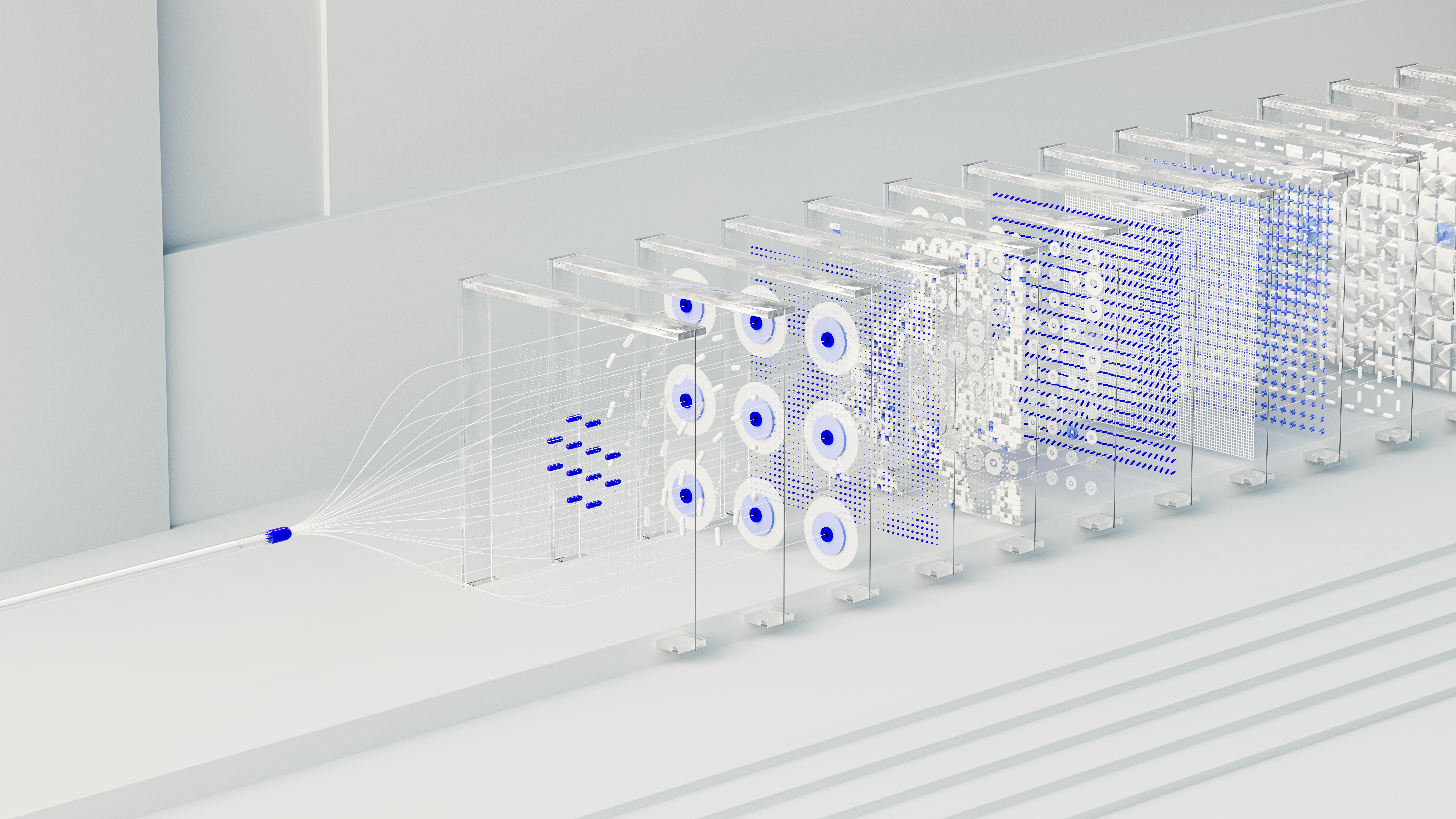

India’s AI boom is unstoppable, with the market projected to reach $7.8 billion by 2025, driven by large language models (LLMs) powering everything from chatbots to predictive analytics. But traditional LLMs have a glaring flaw: they’re forgetful. Each new session starts from a blank slate, like a goldfish in a digital bowl. Enter persistent memory in LLMs: an approach that lets models retain information across interactions, mimicking human-like recall. For Indian developers in Bengaluru, educators in Delhi, or startups in Hyderabad, this means building smarter AI that remembers user preferences, past conversations, and context. As 5G blankets the nation and 900 million users embrace digital tools, persistent memory is set to supercharge Bharat’s AI applications, from personalized e-learning to efficient customer service. Let’s explore how persistent memory in LLMs is reshaping India’s tech landscape and making AI more intuitive and impactful.
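One common way to get this behaviour is to keep the model itself stateless and store what it should remember outside it, feeding those facts back into every new prompt. The sketch below illustrates that idea under stated assumptions: a single JSON file acts as the memory store, no real LLM is called, and `MemoryStore`, `remember`, `recall`, and `build_prompt` are hypothetical names chosen for illustration, not the API of Mem0, Haystack, or any other library.

```python
import json
from pathlib import Path

# Hypothetical minimal memory layer; not the API of Mem0, Haystack, or any real library.


class MemoryStore:
    """Per-user facts kept in a JSON file so they survive app restarts."""

    def __init__(self, path: Path):
        self.path = path
        # Reload whatever earlier sessions wrote, if the file exists.
        if self.path.exists():
            self.data = json.loads(self.path.read_text(encoding="utf-8"))
        else:
            self.data = {}

    def remember(self, user_id: str, fact: str) -> None:
        facts = self.data.setdefault(user_id, [])
        if fact not in facts:  # skip exact duplicates
            facts.append(fact)
        # Write through to disk so the fact outlives this session.
        self.path.write_text(
            json.dumps(self.data, ensure_ascii=False, indent=2), encoding="utf-8"
        )

    def recall(self, user_id: str) -> list[str]:
        return list(self.data.get(user_id, []))


def build_prompt(store: MemoryStore, user_id: str, message: str) -> str:
    """Prepend remembered facts to the new message; the model stays stateless."""
    facts = store.recall(user_id)
    memory_block = "\n".join(f"- {f}" for f in facts) or "- (nothing yet)"
    return f"Known about this user:\n{memory_block}\n\nUser: {message}"


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "memory.json"

        # Session 1: the user states a preference.
        MemoryStore(path).remember("priya", "Prefers explanations in Hindi")

        # Session 2: a fresh store (as after a restart) reloads it from disk.
        print(build_prompt(MemoryStore(path), "priya", "Explain compound interest"))
```

Because the second `MemoryStore` is created from scratch, the only way the preference reaches the prompt is through the file on disk, which is the "persistence" part. A production system would add per-user isolation, expiry, and consent controls on top of this.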

Challenges and the Road Ahead in India

No innovation is flawless. Implementing persistent memory in LLMs demands robust data infrastructure to keep memory stores free of bias and from growing unmanageably large, a hurdle in bandwidth-scarce rural areas. Whether memory can be added without fine-tuning also remains debated: can it be “solved” with retrieval-augmented generation (RAG) alone, or does persistent storage hold the key? For India, upskilling is vital: platforms like upGrad offer courses (from ₹5,000) on memory-augmented LLMs, preparing freshers for roles paying ₹10-20 lakh at firms like Infosys.

The future? Latent memories in LLMs could enable even deeper persistence, potentially letting AI stand in for experts in fields like law or medicine. With events like the India AI Summit 2025 spotlighting prototypes, Indian innovators are leading the charge; think of frameworks like Mem0 scaling up for enterprise use. Persistent memory isn’t just tech; it’s the bridge to AI that “remembers” India’s diversity, solving real problems like personalized education and fraud detection.

In conclusion, persistent memory in LLMs is India’s ticket to an AI edge, blending efficiency with empathy. Whether you’re a student coding your first bot or a founder scaling an app, dive in: experiment with open-source tools like Haystack on GitHub. As Bharat surges toward a $1 trillion digital economy, this tech helps ensure our AI doesn’t forget the human touch. What’s your take on persistent memory? Share below and join the conversation!
